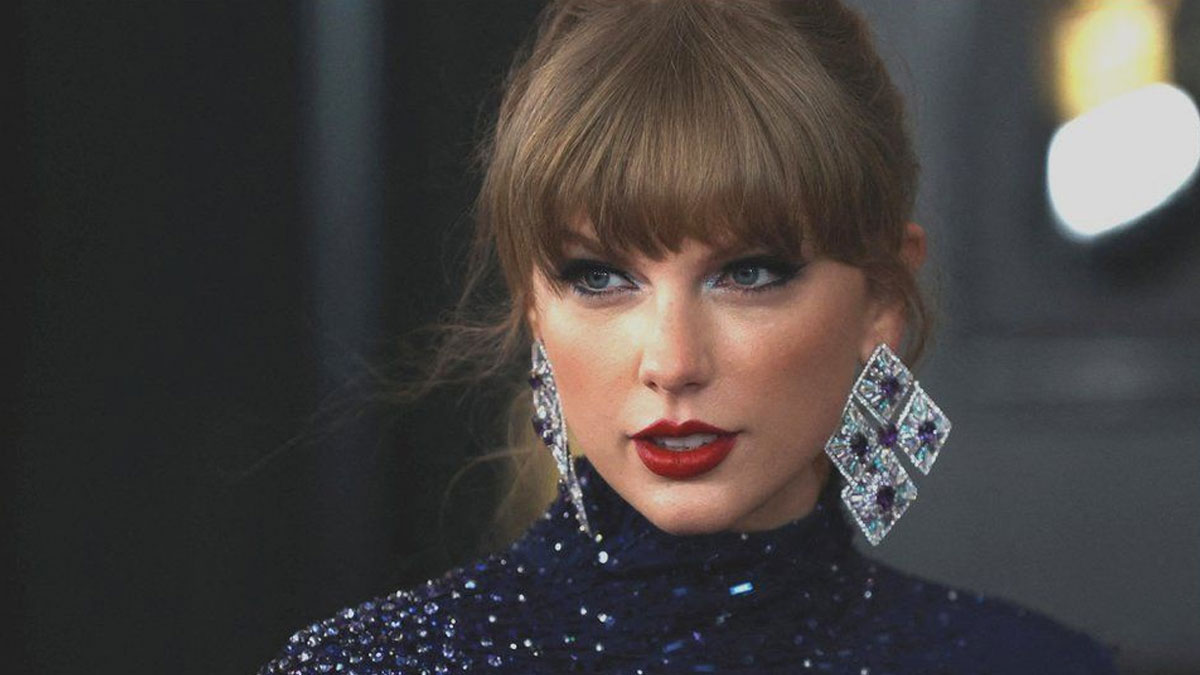

Social media platform X has blocked searches for Taylor Swift after explicit AI-generated images of the singer began circulating on the site.

In a statement, X's head of business operations, Joe Benarroch, said it was a "temporary action" to prioritise safety.

Searching for Swift on the site now returns a message that says: "Something went wrong. Try reloading."

Fake graphic images of the singer appeared on the site last week.

Some went viral and were viewed millions of times, prompting alarm from US officials and fans of the singer.

Posts and accounts sharing the fake images were flagged by her fans, who populated the platform with real images and videos of her, using the words "protect Taylor Swift".

X, formerly Twitter, released a statement saying that posting non-consensual nudity on the platform is "strictly prohibited".

The company says it has a zero-tolerance policy towards such content, and that its teams are actively removing all identified images and taking appropriate action against the accounts responsible for posting them.

Source: BBC
